The Role of Artificial Intelligence in Cybersecurity - ChatGPT

Artificial intelligence (AI) is becoming an increasingly important tool in the field of cybersecurity. As cyber threats grow more advanced, traditional security measures alone are no longer enough to protect against attacks. AI offers a way to strengthen cybersecurity defences by automating threat detection and response, analysing large amounts of data, and predicting potential attacks. In this article, we explore the role of ChatGPT in cybersecurity and its impact on the field.

Interest in ChatGPT

Launched in November 2022, ChatGPT is an AI chatbot developed by OpenAI. It is built on a large language model that uses deep learning techniques, trained on vast amounts of text data, to generate coherent, contextually appropriate responses to a wide variety of questions and prompts. According to a UBS analyst note, ChatGPT is estimated to have reached 100 million monthly active users just two months after its launch, making it the fastest-growing consumer application in history.

Figure 1: An example of using ChatGPT

ChatGPT is generating so much interest because it is a powerful language model that can understand and generate natural language text in a way that is remarkably close to how humans communicate. One of its most impressive aspects is its ability to learn from the vast amount of data it has been trained on, which allows it to generate responses that are often accurate, and also creative and surprising. This makes it a versatile tool for a wide range of applications, including customer service, language translation, content creation, and much more.

Another reason why ChatGPT is generating so much interest is that it represents a significant step forward in the development of AI and machine learning. With more companies and organisations looking to leverage the power of AI, emerging tools like ChatGPT are becoming increasingly valuable and are likely to play a significant role in shaping the future of technology and business.

GPT-4

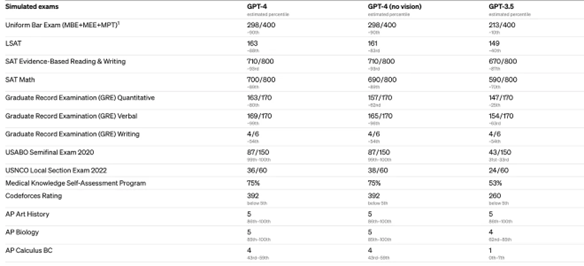

On 14th March 2023, OpenAI released the highly anticipated GPT-4, which is designed to generate human-like text and computer code. According to OpenAI, GPT-4 improves on previous models in the factual correctness of its answers, and it performs considerably better than the previous GPT model on simulated standardised tests such as the SAT and the bar exam.

Figure 2: Sample of simulated exam results of GPT-4 by OpenAI

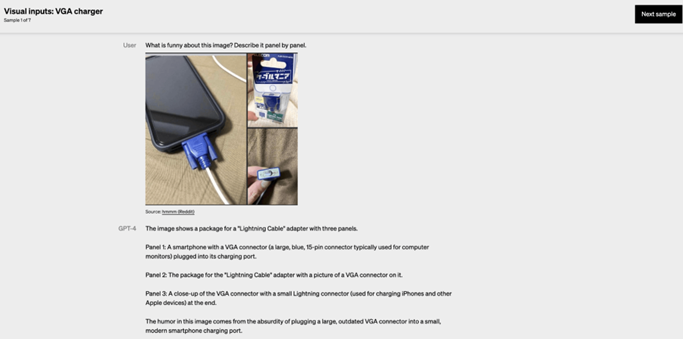

Another major upgrade is GPT-4's ability to accept image inputs alongside text (not yet available to the public at the time of writing). Users can specify any vision or language task by entering interspersed text and images. An example of GPT-4 correctly interpreting a complex image is shown as follows:

Figure 3: Sample of GPT-4 results with visual input, by OpenAI

Potential Uses of ChatGPT in Bolstering Cybersecurity

Below are some potential ways that GPT-4 and future models could enhance cybersecurity:

Automated threat detection and response: ChatGPT and similar AI models could help monitor networks, endpoints, and other IT systems to identify potential threats. AI systems can learn from past attacks to build models that detect new ones, allowing faster detection and response. AI could also automate parts of incident response, reducing the time it takes to remediate an attack.
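To make the detect-and-respond loop concrete, here is a minimal Python sketch. It is a simple rule-based stand-in, not ChatGPT itself: it counts failed logins per source IP in a sliding time window and automatically adds the IP to a block list once a threshold is crossed. The class name, the five-failures-in-60-seconds threshold and the in-memory block list are all assumptions for illustration; a learned model would replace the fixed threshold, and a real response would push a firewall rule.

```python
from collections import defaultdict, deque
from dataclasses import dataclass, field


@dataclass
class BruteForceResponder:
    """Hypothetical detector: flags bursts of failed logins and blocks the source IP."""

    max_failures: int = 5      # assumed threshold
    window_seconds: int = 60   # assumed sliding-window length
    failures: dict = field(default_factory=lambda: defaultdict(deque))
    blocked: set = field(default_factory=set)

    def observe(self, timestamp: int, ip: str, success: bool) -> bool:
        """Process one login event (in time order); return True if it triggered a block."""
        if success or ip in self.blocked:
            return False
        recent = self.failures[ip]
        recent.append(timestamp)
        # Discard failures that have slid out of the time window.
        while recent and timestamp - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_failures:
            # Automated response: here we only record the block; a real system
            # would call a firewall or identity-provider API at this point.
            self.blocked.add(ip)
            return True
        return False


if __name__ == "__main__":
    responder = BruteForceResponder()
    for t in range(0, 50, 10):  # five failures from one IP within 40 seconds
        if responder.observe(t, "203.0.113.7", success=False):
            print(f"t={t}s: blocked 203.0.113.7")
```

Spreading the same five failures over several minutes would not trigger a block, because older failures drop out of the window.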

Big data analysis: With the growth of big data, cybersecurity teams need to analyse large volumes of data to detect patterns and identify potential attacks. ChatGPT could be used to analyse data such as network traffic, log files, and user behaviour to identify anomalies that may indicate a threat.
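The anomaly-detection idea can be illustrated with a classical statistical baseline rather than a language model. The sketch below, which assumes hourly outbound traffic volumes per host and a hypothetical three-standard-deviation threshold, flags hosts whose current volume is far from the historical mean (a z-score test). A tool like ChatGPT might then be asked to explain or summarise the flagged hosts for an analyst.

```python
import statistics


def find_anomalies(baseline: list[float], current: dict[str, float],
                   threshold: float = 3.0) -> list[str]:
    """Return hosts whose current value lies more than `threshold` sample standard
    deviations from the baseline mean (a simple z-score test)."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)  # needs at least two baseline points
    if stdev == 0:
        # A perfectly flat baseline: treat any deviation at all as anomalous.
        return sorted(host for host, value in current.items() if value != mean)
    return sorted(
        host for host, value in current.items()
        if abs(value - mean) / stdev > threshold
    )


if __name__ == "__main__":
    # Hypothetical outbound megabytes per hour observed for a typical host.
    history = [120, 130, 125, 118, 122, 128, 131, 119]
    now = {"web-01": 127, "db-02": 121, "hr-laptop-17": 950}
    print(find_anomalies(history, now))
```

A z-score assumes fairly stable traffic; seasonal patterns such as nightly backups would need a separate baseline per time of day.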

Predictive analytics: ChatGPT could be used to predict potential attacks based on historical data and patterns. Such predictive analytics could help cybersecurity teams stay ahead of attackers and take proactive steps to prevent attacks.
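As a minimal illustration of forecasting from historical data, the sketch below uses an exponentially weighted moving average (EWMA), a simple time-series technique rather than anything specific to ChatGPT, to forecast next week's count of a hypothetical alert type and decide whether to escalate. The smoothing factor, the function names and the escalation threshold are assumptions.

```python
def ewma_forecast(history: list[float], alpha: float = 0.5) -> float:
    """One-step-ahead forecast with an exponentially weighted moving average.

    Each new observation gets weight `alpha`; older observations decay
    geometrically by (1 - alpha) per step.
    """
    if not history:
        raise ValueError("history must not be empty")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    forecast = history[0]
    for value in history[1:]:
        forecast = alpha * value + (1 - alpha) * forecast
    return forecast


def should_escalate(history: list[float], threshold: float, alpha: float = 0.5) -> bool:
    """Escalate when the forecast for the next period exceeds `threshold` (assumed policy)."""
    return ewma_forecast(history, alpha) > threshold


if __name__ == "__main__":
    # Hypothetical weekly counts of blocked phishing emails.
    weekly = [40, 45, 60, 80, 110]
    print(ewma_forecast(weekly))  # smoothed forecast for next week
    print(should_escalate(weekly, 75.0))
```

An EWMA lags a steadily rising trend (here the forecast of about 88 is below the latest value of 110); a trend-aware method such as Holt's linear smoothing would react faster.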

Security automation: ChatGPT could be integrated into security automation workflows to perform routine tasks, such as scanning logs for suspicious activity, alerting security teams to potential threats, and generating incident reports.
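A routine log-scanning and reporting task can be sketched in a few lines of Python. The regular expressions below are hypothetical and deliberately naive, standing in for a maintained detection rule set; in a ChatGPT-assisted workflow, the structured findings could be passed to the language model to draft the narrative part of the incident report.

```python
import re
from collections import Counter

# Hypothetical, deliberately naive detection rules: rule name -> pattern.
RULES = {
    "sql_injection": re.compile(r"(?:'|%27)\s*or\s+1\s*=\s*1|union\s+select", re.IGNORECASE),
    "path_traversal": re.compile(r"\.\./|%2e%2e%2f", re.IGNORECASE),
    "failed_login": re.compile(r"authentication failure|failed password", re.IGNORECASE),
}


def scan_logs(lines: list[str]) -> list[tuple[str, int, str]]:
    """Return (rule, line number, line) for every log line that matches a rule."""
    findings = []
    for number, line in enumerate(lines, start=1):
        for rule, pattern in RULES.items():
            if pattern.search(line):
                findings.append((rule, number, line.strip()))
    return findings


def incident_report(findings: list[tuple[str, int, str]]) -> str:
    """Render findings as a short plain-text report for the security team."""
    if not findings:
        return "No suspicious activity found."
    counts = Counter(rule for rule, _, _ in findings)
    report = [f"Suspicious events: {len(findings)}"]
    report += [f"- {rule}: {count}" for rule, count in sorted(counts.items())]
    report += [f"  line {number}: {text}" for _, number, text in findings]
    return "\n".join(report)


if __name__ == "__main__":
    sample_log = [
        "GET /index.html 200",
        "GET /search?q=' OR 1=1 -- 200",
        "GET /../../etc/passwd 404",
        "sshd: Failed password for root from 203.0.113.7",
    ]
    print(incident_report(scan_logs(sample_log)))
```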

Threat intelligence: ChatGPT could analyse threat intelligence feeds to identify new and emerging threats. It could also be used to correlate threat intelligence with other data sources to identify potential attacks.
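Correlating threat intelligence with other data sources often comes down to matching indicators of compromise (IOCs) against local telemetry. This sketch, using only Python's standard `ipaddress` module, checks outbound connection records against a hypothetical feed of malicious IP addresses and CIDR ranges; the feed format, the (source, destination) record layout and the function names are assumptions. All addresses come from reserved documentation ranges.

```python
import ipaddress


def load_indicators(feed_entries: list[str]) -> list:
    """Parse feed lines (single IPs or CIDR ranges) into network objects, skipping blanks."""
    return [ipaddress.ip_network(entry.strip(), strict=False)
            for entry in feed_entries if entry.strip()]


def correlate(connections: list[tuple[str, str]], indicators: list) -> list[tuple[str, str, str]]:
    """Return (source, destination, matching indicator) for connections to listed addresses."""
    hits = []
    for source, destination in connections:
        address = ipaddress.ip_address(destination)
        for network in indicators:
            if address in network:  # False (not an error) if the IP versions differ
                hits.append((source, destination, str(network)))
                break
    return hits


if __name__ == "__main__":
    # Hypothetical feed and firewall records.
    feed = ["198.51.100.0/24", "203.0.113.7", ""]
    connections = [
        ("10.0.0.5", "198.51.100.23"),
        ("10.0.0.8", "192.0.2.10"),
        ("10.0.0.9", "203.0.113.7"),
    ]
    for hit in correlate(connections, load_indicators(feed)):
        print(hit)
```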

Vulnerability management: ChatGPT could be used to identify vulnerabilities in IT systems, such as outdated software or unpatched systems, and recommend possible remediation actions. This could help to prevent attacks that exploit known vulnerabilities.
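Flagging outdated software can be sketched as comparing an inventory of installed versions against the minimum patched version for each package. The package names and version numbers below are hypothetical, not taken from any real advisory, and the parser only handles plain dotted numeric versions such as `2.17.1`; real tooling must also cope with suffixes like release candidates.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn '2.17.1' into (2, 17, 1) so versions compare numerically, not as text."""
    return tuple(int(part) for part in version.split("."))


def find_outdated(installed: dict[str, str],
                  minimum_patched: dict[str, str]) -> list[tuple[str, str, str]]:
    """Return (package, installed version, minimum patched version) for each package
    whose installed version is older than the minimum patched version."""
    outdated = []
    for package, version in sorted(installed.items()):
        required = minimum_patched.get(package)
        if required is not None and parse_version(version) < parse_version(required):
            outdated.append((package, version, required))
    return outdated


if __name__ == "__main__":
    # Hypothetical inventory and advisory data.
    inventory = {"web-server": "1.25.3", "tls-lib": "3.0.7", "logging-lib": "2.9.1"}
    advisories = {"tls-lib": "3.0.8", "logging-lib": "2.17.1", "web-server": "1.25.3"}
    for package, have, need in find_outdated(inventory, advisories):
        print(f"{package}: installed {have}, upgrade to at least {need}")
```

Comparing tuples of integers matters here: as plain strings, "2.9.1" would sort after "2.17.1" and the outdated logging library would be missed.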

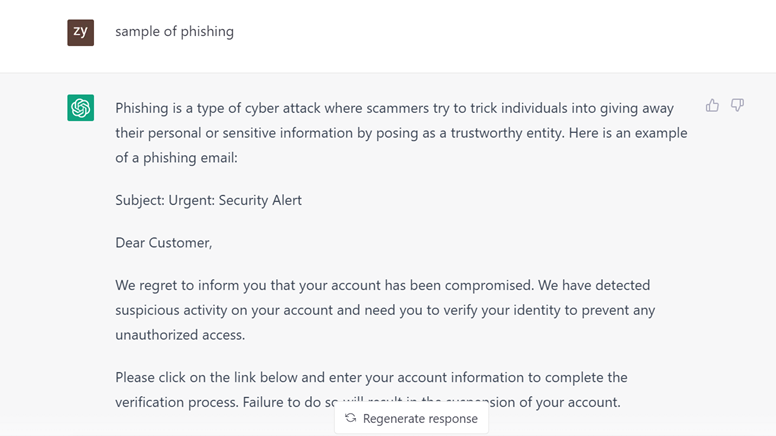

ChatGPT could also be used to improve cybersecurity awareness and training. It can be prompted to generate simulated phishing emails that serve as training material, teaching employees how to spot and report phishing attempts. ChatGPT could also generate simulated cyber attack scenarios to help train incident response teams to respond to real-world attacks.

Drawbacks of ChatGPT for Cybersecurity

Despite these potential benefits, there are also concerns about the use of AI such as ChatGPT in cybersecurity. Malicious actors could attempt to use AI language models like ChatGPT to conduct cyber attacks, for example through social engineering or by creating convincing phishing emails or messages.

Figure 4: Using ChatGPT to generate a phishing email

As AI becomes more advanced, as seen with GPT-4, it may become increasingly difficult for traditional cybersecurity measures to detect and mitigate AI-generated attacks. The following are some examples of advanced cyber threats that are of concern:

Adversarial Machine Learning: Adversarial machine learning studies attacks on AI models, most notably the crafting of adversarial examples: inputs specifically designed to deceive or manipulate a model into making the wrong decision. Attackers could use adversarial examples to bypass security measures such as malware detection systems and spam filters.
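To show what an adversarial example is, the sketch below attacks a toy linear malware classifier with hypothetical weights and continuous features. For a linear model the gradient of the score with respect to the input is simply the weight vector, so the fast gradient sign method (FGSM) reduces to stepping each feature by `-epsilon * sign(w)`. A small, bounded change flips the verdict from malicious to benign; attacks on real detectors are harder but follow the same principle.

```python
def score(weights: list[float], bias: float, features: list[float]) -> float:
    """Linear detector: a positive score means 'malicious'."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def sign(value: float) -> int:
    return (value > 0) - (value < 0)


def fgsm_evasion(weights: list[float], features: list[float], epsilon: float) -> list[float]:
    """One FGSM step that lowers the score of a linear model.

    The gradient of the score with respect to the input is `weights`, so moving
    each feature by -epsilon * sign(weight) gives the largest decrease possible
    when no feature may change by more than epsilon.
    """
    return [x - epsilon * sign(w) for w, x in zip(weights, features)]


if __name__ == "__main__":
    # Hypothetical trained weights and a sample the detector currently flags.
    weights, bias = [2.0, 1.5, -1.0], -1.0
    sample = [1.0, 1.0, 0.0]
    adversarial = fgsm_evasion(weights, sample, epsilon=0.75)
    print(score(weights, bias, sample))       # positive: flagged as malicious
    print(score(weights, bias, adversarial))  # negative: now looks benign
```

Defences such as adversarial training (adding perturbed samples to the training data) and relying on features an attacker cannot cheaply change make this kind of evasion harder.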

Deepfakes: Deepfakes are AI-generated or AI-manipulated images, videos, or audio recordings designed to deceive people into believing they are real. Attackers could use deepfakes to create fake news, phishing scams, and social engineering attacks.

AI-Assisted Social Engineering: AI-assisted social engineering is the use of AI technologies, such as natural language processing and chatbots, to create personalised and convincing social engineering attacks. Attackers could use these techniques to trick users into divulging sensitive information, such as login credentials and personal data.

AI-Enhanced Malware: AI-enhanced malware is malware that uses AI and ML techniques to evade detection and propagate itself more effectively. For example, attackers could use AI to automatically generate and mutate malware code, making it very difficult for security tools to detect and block.
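Why mutation defeats signature-based detection can be shown with a harmless byte string standing in for a malware sample. Inserting one junk instruction changes the SHA-256 hash completely, so an exact-hash blocklist misses the variant, while a similarity measure over overlapping byte sequences (here, Jaccard similarity of 4-byte shingles) still recognises it as a near-duplicate. Production tools use dedicated fuzzy hashes such as ssdeep or TLSH; the shingle size and function names here are assumptions.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def shingles(data: bytes, size: int = 4) -> set[bytes]:
    """All overlapping byte sequences of length `size`."""
    return {data[i:i + size] for i in range(len(data) - size + 1)}


def similarity(a: bytes, b: bytes, size: int = 4) -> float:
    """Jaccard similarity of the two shingle sets (1.0 means identical sets)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


if __name__ == "__main__":
    # Harmless placeholder bytes standing in for a known sample and a mutated variant.
    known = b"connect(); download(payload); execute(payload); persist();"
    variant = b"connect(); nop(); download(payload); execute(payload); persist();"
    known_hashes = {sha256_hex(known)}
    print(sha256_hex(variant) in known_hashes)   # exact signature misses the variant
    print(round(similarity(known, variant), 2))  # but similarity stays high
```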

Furthermore, the widespread use of chatbots and virtual assistants like ChatGPT could potentially increase the attack surface for cyber criminals. Chatbots could be exploited to extract sensitive information or to gain unauthorised access to systems. It is important to ensure that appropriate security measures are in place to protect against such attacks.
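One safeguard against a chatbot leaking sensitive information is to filter its replies before they reach the user. The sketch below redacts email addresses, card-like numbers and a hypothetical internal token format (`tok_` followed by at least 16 letters or digits) with simple regular expressions. The patterns are assumptions and far cruder than a real data-loss-prevention tool; they show where such a filter sits, not how to build a complete one.

```python
import re

# Hypothetical redaction rules, applied in order: label -> pattern.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    # Invented token format used only for this example.
    "TOKEN": re.compile(r"\btok_[A-Za-z0-9]{16,}\b"),
}


def redact(reply: str) -> str:
    """Replace every sensitive match in a chatbot reply with a [LABEL] placeholder."""
    for label, pattern in PATTERNS.items():
        reply = pattern.sub(f"[{label}]", reply)
    return reply


if __name__ == "__main__":
    draft = "Contact alice@example.com, card 4111 1111 1111 1111, token tok_ABCDEFGHIJKLMNOP12."
    print(redact(draft))
```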

Countermeasures Against AI Threats

As AI technologies such as ChatGPT continue to advance, there is a growing concern about the potential risks and threats that they pose to society. Here are some ways to protect against AI threats:

Develop AI Ethical Guidelines: Establishing ethical guidelines for AI development and deployment is essential. These guidelines should address issues such as transparency, accountability, and data privacy protection.

Research Investment: Investing in research to better understand the potential risks and impact of AI is important. This research helps to identify potential threats and develop strategies to mitigate them.

Develop AI Governance Frameworks: Developing governance frameworks for AI could help ensure that AI is developed and deployed in a responsible and safe manner. These frameworks include standards, policies, and regulations that govern the use of AI. Regular security audits should also be conducted to identify any potential vulnerabilities or areas for improvement. This includes reviewing the system's configuration, access controls, and data handling practices.

Educate the Public: Educating the public about AI is important as it could raise awareness about the potential risks and benefits of AI, helping them to make informed decisions. Employees who work with AI systems should also receive training and awareness programs to learn about the potential threats and ways to mitigate them. This includes training on secure coding practices, safe data handling practices, and how to identify and respond to potential threats.

Collaborate with Industry and Governments: Collaboration between industry and government can help ensure that AI is developed and deployed in a responsible and safe manner. It could also help to establish best practices and standards for the use of AI.

Develop AI Defence Mechanisms: Developing AI defence mechanisms could help to protect against AI threats. These mechanisms include measures such as anomaly detection, intrusion detection, and access control.

Implement AI Safety Features: Implementing safety features in AI systems helps to mitigate the risks associated with AI. Such features include fail-safe mechanisms, redundancy, and error correction.
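A fail-safe mechanism can be sketched as a wrapper around an AI classifier: if the model crashes, returns a nonsensical score, or is too unsure, the system falls back to the most conservative action instead of trusting the output. The function name, the `[0, 1]` score convention, the 0.5 decision threshold, the uncertainty margin and the choice of quarantine as the safe default are all assumptions for illustration.

```python
import math
from typing import Callable


def fail_safe_decision(classify: Callable[[str], float], item: str,
                       threshold: float = 0.5, margin: float = 0.2) -> str:
    """Return 'block', 'allow' or 'quarantine' for `item`.

    `classify` is any model returning a maliciousness score in [0, 1].
    Errors, invalid scores and scores within `margin` of the threshold all
    fall back to 'quarantine' (hold for human review).
    """
    try:
        score = float(classify(item))
    except Exception:  # deliberately broad: any model failure must fail safe
        return "quarantine"
    if math.isnan(score) or not 0.0 <= score <= 1.0:
        return "quarantine"
    if abs(score - threshold) < margin:
        return "quarantine"
    return "block" if score >= threshold else "allow"
```

The trade-off is more items for human review; in exchange, a failing or uncertain model never silently lets traffic through.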

Monitor and Regulate AI: Monitoring and regulating the use of AI is important. AI systems should be continuously monitored to detect any unusual or unexpected behaviour. This includes monitoring for any attempts to manipulate the system or compromise its data.
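Monitoring a chatbot for manipulation attempts could start with something as simple as screening incoming prompts for known prompt-injection phrasing and alerting when such prompts make up an unusual share of recent traffic. The phrase list, window size, alert ratio and class name below are hypothetical, and keyword matching is easy to evade, so this is at best one signal among many.

```python
import re
from dataclasses import dataclass, field

# Hypothetical phrases associated with prompt-injection attempts.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (?:all |any )?(?:previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (?:your|the) system prompt", re.IGNORECASE),
    re.compile(r"you are now in developer mode", re.IGNORECASE),
]


@dataclass
class PromptMonitor:
    window: int = 10          # number of recent prompts considered (assumed)
    alert_ratio: float = 0.2  # alert above 20% suspicious prompts (assumed)
    recent: list = field(default_factory=list)
    flagged: list = field(default_factory=list)

    def check(self, user: str, prompt: str) -> bool:
        """Record one prompt; return True if it matches a suspicious pattern."""
        suspicious = any(p.search(prompt) for p in SUSPICIOUS_PATTERNS)
        if suspicious:
            self.flagged.append((user, prompt))  # kept for analyst review
        self.recent = (self.recent + [suspicious])[-self.window:]
        return suspicious

    def should_alert(self) -> bool:
        """True when the share of suspicious prompts in the window exceeds the ratio."""
        if not self.recent:
            return False
        return sum(self.recent) / len(self.recent) > self.alert_ratio
```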

Future of ChatGPT in Cybersecurity

The future of ChatGPT as an AI language model is exciting and full of possibilities. Here are some potential developments that could shape it:

Improved Conversational Abilities: ChatGPT could be developed to have more sophisticated conversational abilities such as emotional intelligence, humour, and context-awareness. Because ChatGPT is trained on vast amounts of text data and learns to mimic human conversation patterns, it could enable more realistic and engaging chatbots.

Integration with other AI technologies: ChatGPT could be integrated with other AI technologies such as speech recognition, image recognition, and machine learning algorithms, to provide a comprehensive and personalised experience for users.

Enhanced Security Features: ChatGPT could be developed with enhanced security features to protect user data and prevent potential security breaches. ChatGPT could be used to develop security chatbots that can interact with users and provide assistance in real-time. These chatbots can be used to answer common security-related queries, provide guidance on secure practices, and help users troubleshoot security issues.

Adoption by Businesses: ChatGPT could be adopted by businesses to provide better customer support and an enhanced customer experience, leading to increased efficiency and productivity. An example would be personalised customer service chatbots that can interact with customers in a more human-like manner.

Summary

ChatGPT is capable of generating human-like responses to a wide range of questions and topics, and OpenAI continues to improve it with each new version so that it provides more accurate and relevant information to its users. However, as ChatGPT matures, cyber attackers could use it to launch attacks that are difficult to protect against, creating a growing need for organisations to employ AI defences to level the playing field. Organisations will need to implement a comprehensive cybersecurity strategy that includes a range of tools, techniques, and best practices to protect effectively against such attacks. Ultimately, cybersecurity is a team effort, and everyone in the organisation needs to be aware of the risks and take steps to mitigate them.

The BDO Cybersecurity Team has developed a track record over the years by providing cybersecurity services such as incident response, network penetration testing, physical security, application security, and security research across various industries. Contact us to learn more about how our services can help you better manage and mitigate cybersecurity risks, for greater peace of mind.

References

https://blogs.blackberry.com/en/2023/03/the-growing-influence-of-chatgpt

https://www.csa.gov.sg/Tips-Resource/publications/cybersense/2023/chatgpt---learning-enough-to-be-dangerous

https://www.cshub.com/attacks/articles/chatgpt-cyber-attack-threat

https://www.entrepreneur.com/science-technology/what-does-chatgpt-mean-for-the-future-of-business/445020

https://www.reuters.com/technology/chatgpt-sets-record-fastest-growing-user-base-analyst-note-2023-02-01/